Trisul Blog

From missing NAT logs to silent storage failures, one overlooked detail can derail months of IPDR compliance work. Here’s how ISPs can stay audit-ready without burning out.

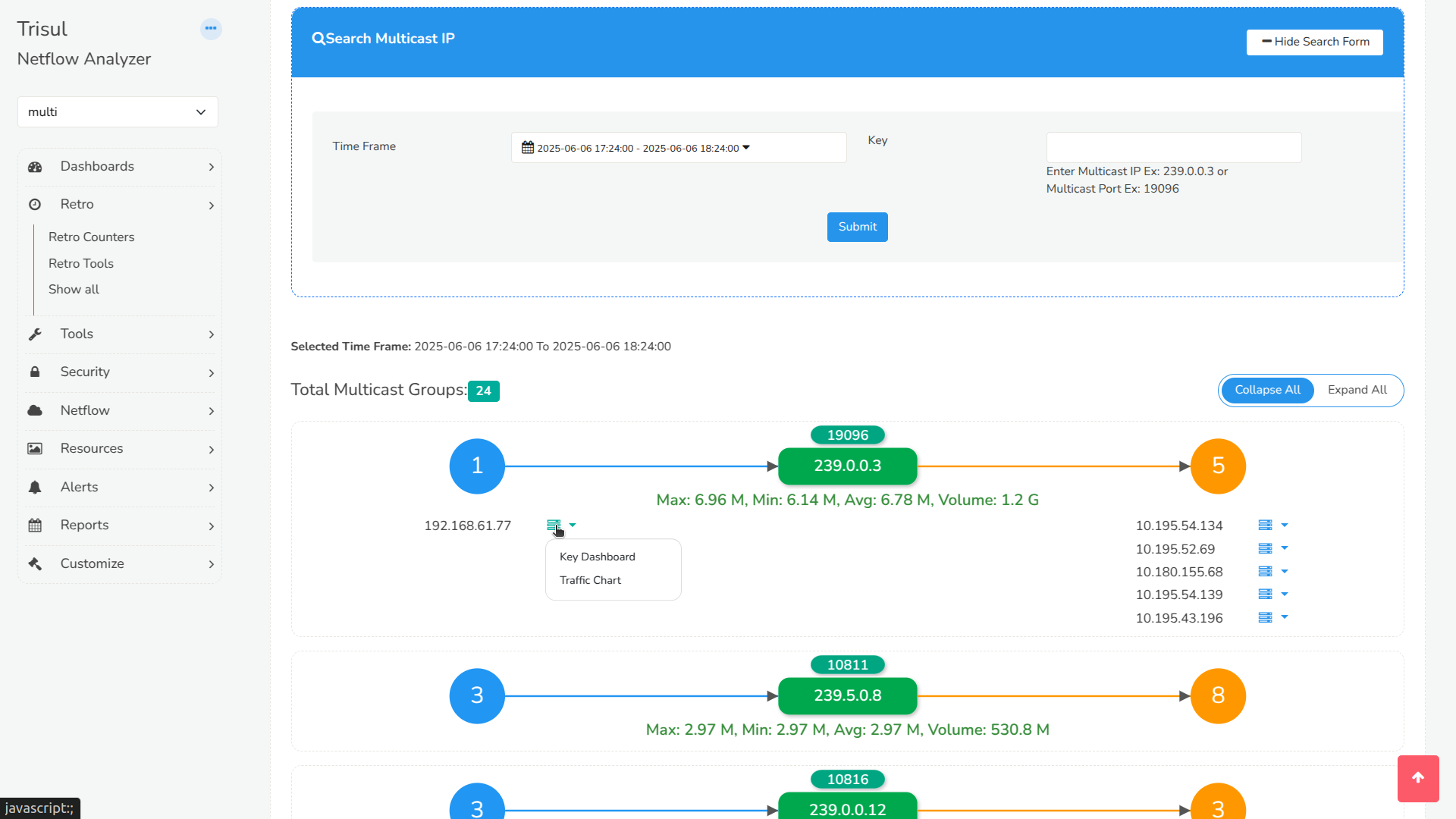

Multicast traffic has long been a black box in network visibility. With Multicast GraphX, Trisul gives you an interactive visual map of multicast groups, senders, receivers, and traffic metrics.

Imagine a world where your AI doesn’t just show you data but tells you what it means. CrewAI is ushering in that reality—one agent, one task, one insight at a time.

Your Fortinet devices already know who's on the network—Trisul just makes that knowledge actionable. Learn how to bridge security logging with flow analytics.

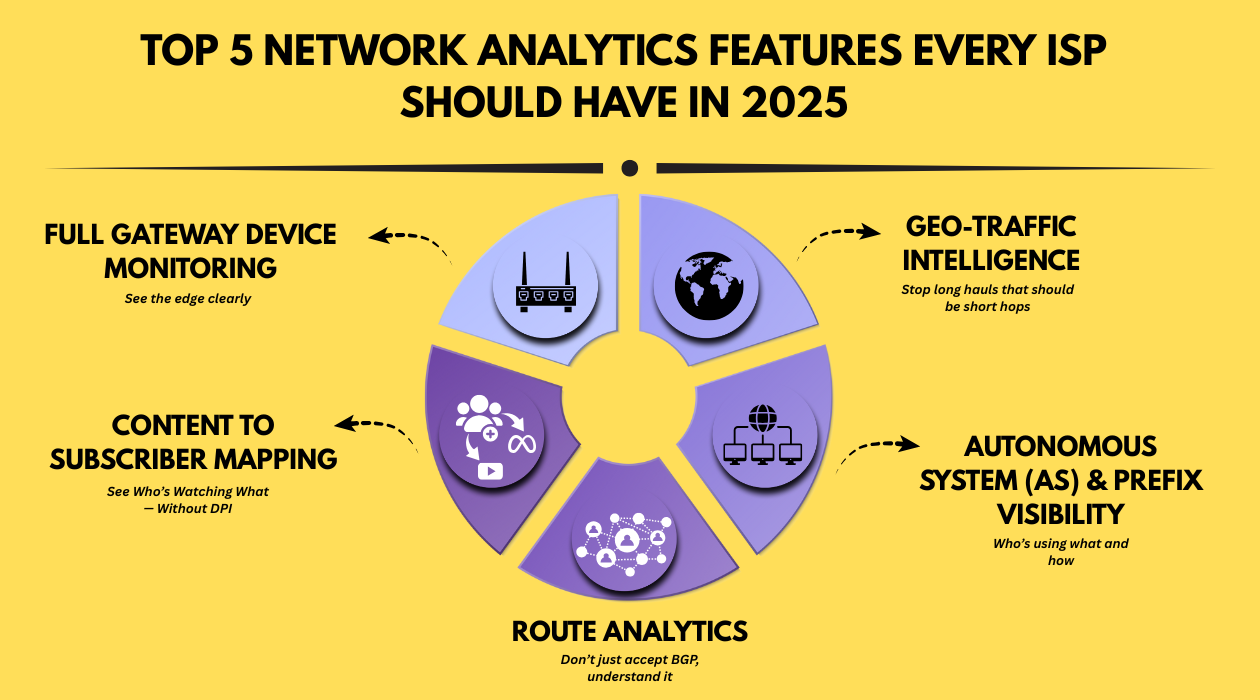

Imagine being able to pinpoint network congestion in real time, optimize traffic flow, and deliver seamless internet experiences to your customers. Sounds like a dream? With the right network analytics features, it's a reality.

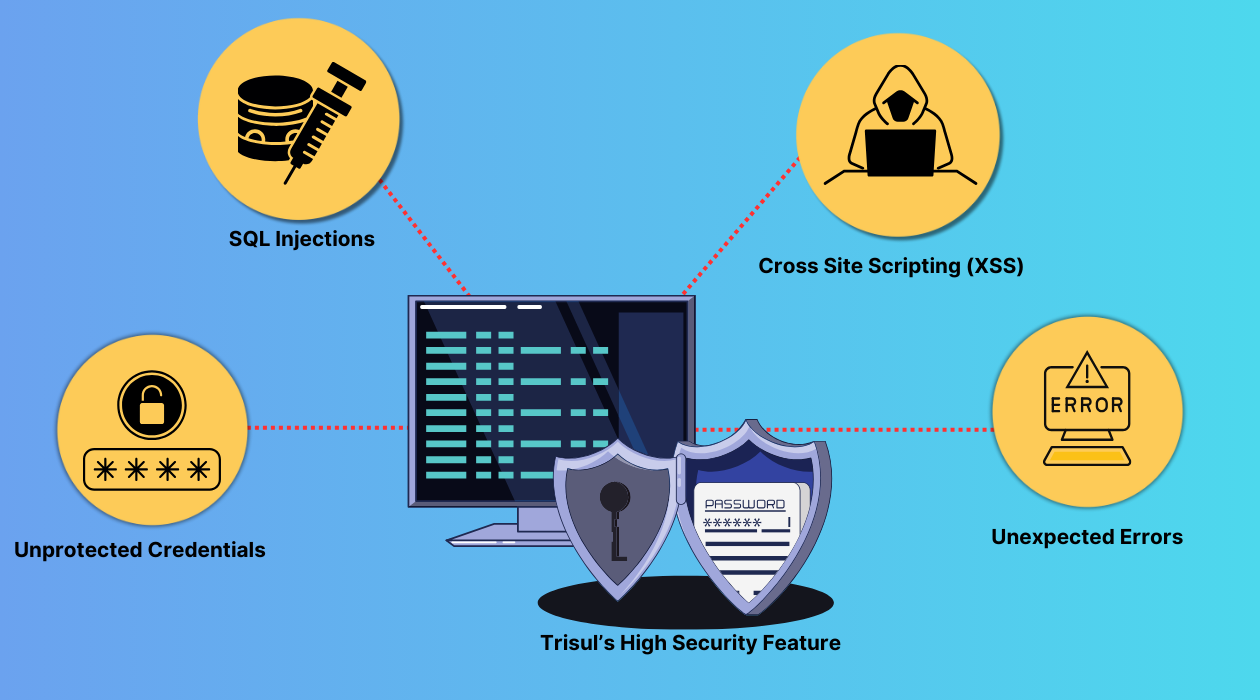

With Trisul's High Security Feature, you can protect your system against common attack vectors such as cross-site scripting (XSS), SQL injection, and error-handling exploits.

Our latest Trisul Network Analytics release takes your network management to the next level with precise geolocation for satellite internet, enhanced security through rigorous VAPT, and the new Crosskey Tree module for advanced data visualization. It is now available on Ubuntu 24.04 LTS and includes an Active Directory Connector for user-level tracking. Explore all the details in the full release notes!

We are giving back to the network monitoring community by open sourcing nflossmon, a Linux command-line tool that measures packet loss in NetFlow feeds. It is designed to run unobtrusively on the same server as your NetFlow analyzers. Get the free tool now and find out whether your NetFlow feeds are reliable.

Experiencing traffic chart display issues? Download our latest Trisul hotfix to resolve them and ensure uninterrupted insights.

Explore the key highlights of Trisul Network Analytics' 2024 releases, from scalability and user experience enhancements to groundbreaking features that simplify network monitoring and optimization.