Trisul AI CLI 0.2.0 Launch: Your Adaptive Network Intelligence in 2026

Let me start with a simple question.

When you’re trying to understand what’s happening on your network, what actually slows you down?

It’s almost never the lack of data.

It’s the distance between the question in your head and the place where the answer lives.

That’s the gap we started pushing on in our last update.

We shared an early look at a conversational way to interact with Trisul: a working direction we wanted to test in the real world. The idea was straightforward. What if asking the network a question felt as natural as asking a teammate sitting next to you?

Fast forward to now, and that idea has moved from a glimpse to something people can actually use.

Since that first preview, the idea has been actively exercised inside Trisul. What has changed isn't the direction but the depth. The AI CLI now goes beyond interpreting a question: it reaches into remote hubs, pulls live and historical telemetry, correlates alerts, compares traffic across regions, and turns the result into something you can actually share.

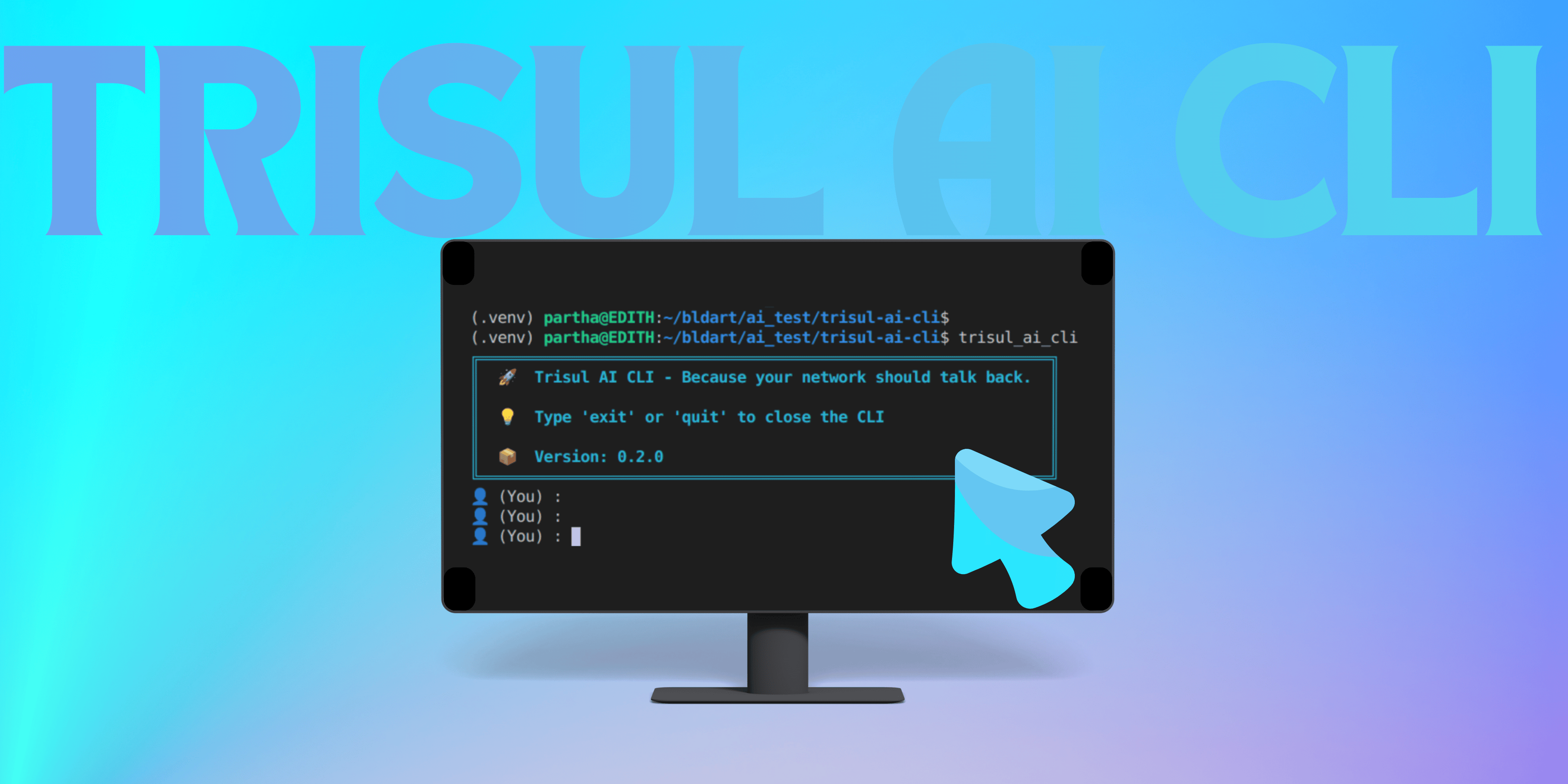

Today, we’re releasing Trisul AI CLI 0.2.0.

This isn’t a preview, a concept, or an experiment anymore. It’s a production-ready way to interact with live network telemetry using natural language, built directly into Trisul’s analytics engine.

In the rest of this post, let’s look at how Trisul AI CLI approaches these questions in practice.

Adaptive Reasoning with a Pluggable Multi-LLM Approach

If you look at how AI is embedded into most network analytics platforms today, a clear pattern emerges.

The AI layer is usually built around a single model. That model explains dashboards, summarizes alerts, and answers questions in natural language. One model, one behavior profile, one set of trade-offs.

That approach is simple and predictable. But once Trisul AI CLI started handling real operational questions, its limits became obvious.

Network questions don’t all behave the same way.

For example, take the question:

“What changed on the network after 10 PM?”

In a single-model system, this usually turns into a snapshot.

The question is effectively interpreted as:

“Describe what the network looked like after 10 PM.”

So the answer sounds like:

“After 10 PM, traffic levels increased and several alerts were observed across the network.”

That’s not wrong.

But it’s descriptive. It looks at the network after 10 PM in isolation.

With multiple models in play, the same question is interpreted differently.

The system treats it as:

“Compare the network before and after 10 PM and explain the difference.”

So the answer shifts to something like:

“Traffic patterns after 10 PM differ from earlier periods, with new alert types appearing and traffic distribution changing across specific hosts.”

Same data. Same question. So what actually changed?

One approach answers:

“What does the network look like?”

The other quietly asks a better follow-up:

“What changed compared to before?”

Nothing new was added. No extra telemetry. No additional dashboards.

Just a shift in how the question was reasoned about.
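To make the snapshot-versus-comparison distinction concrete, here is a minimal Python sketch over toy data. The record shape, host addresses, alert names, and the `snapshot`/`compare` helpers are hypothetical illustrations, not Trisul’s data model or how the AI CLI computes its answers. The only point is that the second reading needs a baseline window of equal length to compare against.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical record shape: (timestamp, host, bytes, alert type or None).
Record = tuple[datetime, str, int, Optional[str]]


def snapshot(records: list[Record], cutoff: datetime, span: timedelta) -> dict:
    """Snapshot reading: describe only the window [cutoff, cutoff + span)."""
    after = [r for r in records if cutoff <= r[0] < cutoff + span]
    return {
        "total_bytes": sum(r[2] for r in after),
        "alert_types": sorted({r[3] for r in after if r[3]}),
    }


def compare(records: list[Record], cutoff: datetime, span: timedelta) -> dict:
    """Comparison reading: contrast equal-length windows before and after cutoff."""
    per_host: dict[str, dict[str, int]] = defaultdict(lambda: {"before": 0, "after": 0})
    alerts: dict[str, set[str]] = {"before": set(), "after": set()}
    for ts, host, nbytes, alert in records:
        if cutoff - span <= ts < cutoff:
            window = "before"
        elif cutoff <= ts < cutoff + span:
            window = "after"
        else:
            continue  # outside both windows
        per_host[host][window] += nbytes
        if alert:
            alerts[window].add(alert)
    return {
        "host_deltas": {h: w["after"] - w["before"] for h, w in per_host.items()},
        "new_alert_types": sorted(alerts["after"] - alerts["before"]),
    }


if __name__ == "__main__":
    t = datetime(2026, 1, 15, 22, 0)
    records = [
        (t - timedelta(minutes=30), "10.0.0.5", 1_000, None),
        (t - timedelta(minutes=10), "10.0.0.7", 500, "port-scan"),
        (t + timedelta(minutes=5), "10.0.0.5", 4_000, "dns-tunnel"),
        (t + timedelta(minutes=20), "10.0.0.7", 300, "port-scan"),
    ]
    print(snapshot(records, t, timedelta(hours=1)))
    # {'total_bytes': 4300, 'alert_types': ['dns-tunnel', 'port-scan']}
    print(compare(records, t, timedelta(hours=1)))
    # {'host_deltas': {'10.0.0.5': 3000, '10.0.0.7': -200}, 'new_alert_types': ['dns-tunnel']}
```

The snapshot reports that a port scan is present after 10 PM; the comparison shows it was already there, and that the DNS tunnelling alert and the jump on 10.0.0.5 are what is actually new.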

And that shift is often the difference between reading the network and actually understanding it. Most platforms accept the single-model trade-off because their AI layer is designed as a conversational assistant sitting on top of analytics.

Trisul takes a different path.

In the AI CLI, the model isn’t just explaining data. It participates in how data is retrieved, correlated, and shaped into an answer. Once AI moves closer to the analytics engine itself, the differences between questions start to matter.

That’s why Trisul was designed with Multi-LLM support.

Different models bring different strengths:

- Gemini for correlation-heavy reasoning where structure matters

- GPT-4 for fast metric extraction and exploratory queries

- Claude for responses that require consistency, clarity, and policy-grade precision

Rather than forcing every question through a single reasoning lens, Trisul is designed to support multiple LLMs, each bringing a different way of reasoning about network data.
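The post doesn’t describe how a question is matched to a model, so the following is only one possible shape for a pluggable multi-model layer, written as a Python sketch. The profile names loosely mirror the list above (correlation, exploratory, precision), but the `ModelRouter` class, its keyword classifier, and the stub backends are hypothetical; a real backend would wrap a vendor SDK client.

```python
from dataclasses import dataclass, field
from typing import Callable

# A backend is any callable that takes a prompt and returns text.
# Here the backends are stubs; real ones would wrap an LLM client.
Backend = Callable[[str], str]


@dataclass
class ModelRouter:
    """Hypothetical pluggable router: question profile -> model backend."""

    backends: dict[str, Backend] = field(default_factory=dict)
    default_profile: str = "exploratory"

    def register(self, profile: str, backend: Backend) -> None:
        self.backends[profile] = backend

    def classify(self, question: str) -> str:
        # Naive keyword heuristic, for illustration only. A real system might
        # use a cheap model or an explicit user choice instead.
        q = question.lower()
        if any(w in q for w in ("compare", "correlate", "changed", "why")):
            return "correlation"
        if any(w in q for w in ("policy", "compliance", "audit")):
            return "precision"
        return self.default_profile

    def ask(self, question: str) -> str:
        profile = self.classify(question)
        # Fall back to the default backend if nothing is plugged in for this profile.
        backend = self.backends.get(profile) or self.backends[self.default_profile]
        return backend(question)


if __name__ == "__main__":
    router = ModelRouter()
    router.register("correlation", lambda q: f"[correlation model] {q}")
    router.register("exploratory", lambda q: f"[exploratory model] {q}")
    router.register("precision", lambda q: f"[precision model] {q}")
    print(router.ask("What changed on the network after 10 PM?"))
    # [correlation model] What changed on the network after 10 PM?
```

Keeping backends as plain callables is what makes the layer pluggable in this sketch: swapping models means registering a different function, not changing the routing code.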

There’s one more layer to that intelligence: Behavioral Memory.

Over time, the AI CLI learns how you work: the kinds of questions you ask, whether you usually want a quick answer or a deeper breakdown, and whether you tend to explore first or jump straight to validation. Its output can then adapt to your prior interactions, preferred level of detail, and investigation patterns.

This is where personalization starts to matter.

Not in a cosmetic way, but in a practical one. Fewer clarifying questions. Less back-and-forth. Answers that feel like they’re meeting you where you already are.
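The post doesn’t say how Behavioral Memory is stored. As a rough illustration only, here is a Python sketch of one simple approach: keep a bounded window of recent interactions and report the most common detail level and investigation style. The `BehaviorProfile` class and its labels (“brief”/“deep”, “explore”/“validate”) are hypothetical.

```python
from collections import Counter, deque
from dataclasses import dataclass, field


@dataclass
class BehaviorProfile:
    """Hypothetical memory of a user's recent investigation habits.

    Keeps the last `window` interactions and reports the most common
    detail level ("brief"/"deep") and style ("explore"/"validate").
    """

    window: int = 20
    detail: deque = field(init=False)
    style: deque = field(init=False)

    def __post_init__(self) -> None:
        # Bounded deques: old interactions fall off as new ones arrive.
        self.detail = deque(maxlen=self.window)
        self.style = deque(maxlen=self.window)

    def record(self, detail: str, style: str) -> None:
        self.detail.append(detail)
        self.style.append(style)

    @staticmethod
    def _most_common(history: deque, fallback: str) -> str:
        return Counter(history).most_common(1)[0][0] if history else fallback

    def hints(self) -> dict[str, str]:
        return {
            "detail": self._most_common(self.detail, "brief"),
            "style": self._most_common(self.style, "explore"),
        }


if __name__ == "__main__":
    profile = BehaviorProfile()
    for _ in range(3):
        profile.record("deep", "validate")
    profile.record("brief", "explore")
    print(profile.hints())  # {'detail': 'deep', 'style': 'validate'}
```

The bounded window is a deliberate choice in this sketch: habits drift, so recent interactions should outweigh old ones.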

Remote Server Intelligence (Multi-Hub, Multi-Context)

Network questions rarely live in one place.

Traffic might originate in one region, spike in another, and trigger alerts somewhere else. But most tools still force you to investigate them one system at a time.

Trisul AI CLI removes that mental tax.

So a question like:

“Compare traffic patterns between our Tokyo and London data centers”

doesn’t turn into a scavenger hunt.

No hopping between dashboards.

No exporting and stitching reports.

No “I’ll check that later when I have access.”

The AI CLI reaches out to the relevant hubs, aligns time windows, normalizes counters, and returns the comparison as one coherent view, even though the data lives continents apart.
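The post doesn’t spell out how alignment and normalization are done. One common approach, sketched here in Python under stated assumptions, is to convert every hub’s timestamps to UTC, bucket them on shared boundaries, and express each bucket as an average rate so hubs with different sampling intervals become comparable. The sample shape, the `to_rate_buckets`/`compare_hubs` helpers, and the numbers are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone

# Hypothetical sample shape returned by a hub: (timezone-aware start time,
# bytes counted in the sampling interval that starts then).
Sample = tuple[datetime, int]


def to_rate_buckets(samples: list[Sample], bucket_s: int) -> dict[int, float]:
    """Align samples to UTC epoch buckets and normalize each bucket to bits/s.

    Assumes every hub's sampling interval evenly divides bucket_s and starts on a
    bucket boundary, so each sample falls entirely inside one bucket.
    """
    totals: dict[int, int] = defaultdict(int)
    for ts, nbytes in samples:
        epoch = int(ts.timestamp())  # aware datetime -> UTC epoch seconds
        totals[epoch - epoch % bucket_s] += nbytes  # floor to bucket start
    return {start: total * 8 / bucket_s for start, total in totals.items()}


def compare_hubs(a: list[Sample], b: list[Sample], bucket_s: int = 300) -> list[tuple[str, float, float]]:
    """Rows of (UTC bucket start, rate_a bps, rate_b bps) for buckets both hubs cover."""
    ra, rb = to_rate_buckets(a, bucket_s), to_rate_buckets(b, bucket_s)
    return [
        (datetime.fromtimestamp(start, tz=timezone.utc).isoformat(), ra[start], rb[start])
        for start in sorted(ra.keys() & rb.keys())
    ]


if __name__ == "__main__":
    jst = timezone(timedelta(hours=9))
    # Tokyo hub: one-minute samples stamped in local time (19:00-19:04 JST).
    tokyo = [(datetime(2026, 1, 15, 19, m, tzinfo=jst), 75_000) for m in range(5)]
    # London hub: a single five-minute sample stamped in UTC.
    london = [(datetime(2026, 1, 15, 10, 0, tzinfo=timezone.utc), 150_000)]
    print(compare_hubs(tokyo, london))
    # [('2026-01-15T10:00:00+00:00', 10000.0, 4000.0)]
```

Bucketing on UTC epoch boundaries is what lets a Tokyo sample stamped 19:00 JST and a London sample stamped 10:00 UTC land in the same row; converting to bits per second is what makes one-minute and five-minute samples comparable.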

MCP (Model Context Protocol) Server

This is the layer that turns AI from a commentator into a participant.

Without MCP, an AI can only talk about network data. With it, the AI can actually work with it. Queries become actions. Questions become retrievals. Answers are grounded directly in live telemetry instead of summaries or approximations.

With this layer, Trisul’s internals become directly usable by the AI, not as text or summaries, but as live, queryable building blocks. Through TRP (Trisul Remote Protocol) over ZeroMQ, specialized MCP tools for flows, alerts, and sessions, and real-time protobuf parsing, the AI is able to pull exactly what a question demands, nothing more, nothing less.
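To show what “specialized tools” means at the interface level, here is a stdlib-only Python sketch of a tool registry. Each tool carries a name, a description, and a JSON Schema for its inputs, which is the shape MCP uses to describe tools. This sketch is not the MCP SDK, and the `query_flows` tool, its arguments, and the stubbed backend are hypothetical; the real path (TRP over ZeroMQ with protobuf decoding) is only indicated by a comment.

```python
import json
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Tool:
    name: str
    description: str
    input_schema: dict[str, Any]  # JSON Schema describing the tool's arguments
    handler: Callable[..., Any]


TOOLS: dict[str, Tool] = {}


def tool(name: str, description: str, input_schema: dict[str, Any]):
    """Decorator that registers a handler under a tool name."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = Tool(name, description, input_schema, fn)
        return fn
    return register


@tool(
    name="query_flows",
    description="Top flows for a host over the last N minutes (hypothetical).",
    input_schema={
        "type": "object",
        "properties": {
            "host": {"type": "string"},
            "minutes": {"type": "integer"},
            "limit": {"type": "integer"},
        },
        "required": ["host", "minutes"],
    },
)
def query_flows(host: str, minutes: int, limit: int = 10) -> list[dict[str, Any]]:
    # Stub for a TRP request over ZeroMQ; `minutes` would bound the real query window.
    flows = [{"src": host, "dst": f"203.0.113.{i}", "bytes": 1_000 * i} for i in range(1, 4)]
    return sorted(flows, key=lambda f: f["bytes"], reverse=True)[:limit]


def call_tool(name: str, arguments: dict[str, Any]) -> str:
    """Check a model's tool call against the schema's keys, then dispatch it."""
    t = TOOLS.get(name)
    if t is None:
        return json.dumps({"ok": False, "error": f"unknown tool: {name}"})
    props = t.input_schema["properties"]
    missing = [k for k in t.input_schema.get("required", []) if k not in arguments]
    unexpected = [k for k in arguments if k not in props]
    if missing or unexpected:
        return json.dumps({"ok": False, "error": f"missing={missing} unexpected={unexpected}"})
    return json.dumps({"ok": True, "result": t.handler(**arguments)})


if __name__ == "__main__":
    print(call_tool("query_flows", {"host": "10.0.0.5", "minutes": 15, "limit": 2}))
```

The sketch checks only which arguments are present, not their types; a fuller version would validate against the whole schema. The narrow, schema-described tool is what lets the model request exactly the slice of telemetry a question needs.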

Why this matters is simple.

When an AI can reach into counter groups, dynamically create or combine them, and operate over evolving SQL schemas, it no longer has to approximate answers. It doesn’t describe the network from a distance. It touches the same telemetry an engineer would, just without the manual steps.
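“Operating over evolving SQL schemas” implies reading the live schema rather than assuming a fixed one. The following Python sketch uses the standard library’s `sqlite3` purely as a stand-in database, since the post doesn’t say which store or schema Trisul uses. The `alerts` table, its columns, and the helper names are hypothetical. The query keeps the requested columns that exist and reports the ones that don’t, so an answer can say what was left out instead of failing.

```python
import sqlite3


def table_columns(conn: sqlite3.Connection, table: str) -> list[str]:
    """Read the live column list so queries track the current schema."""
    found = conn.execute(
        "SELECT 1 FROM sqlite_master WHERE type = 'table' AND name = ?", (table,)
    ).fetchone()
    if found is None:
        raise ValueError(f"unknown table: {table}")
    # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk) per column.
    return [row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')]


def build_select(conn: sqlite3.Connection, table: str, wanted: list[str]) -> tuple[str, list[str]]:
    """Build a SELECT over the wanted columns that exist; report the ones that don't."""
    existing = set(table_columns(conn, table))
    cols = [c for c in wanted if c in existing]
    dropped = [c for c in wanted if c not in existing]
    if not cols:
        raise ValueError(f"none of {wanted} exist in {table}")
    col_sql = ", ".join(f'"{c}"' for c in cols)
    return f'SELECT {col_sql} FROM "{table}"', dropped


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE alerts (ts INTEGER, source_ip TEXT, sigid TEXT)")
    print(build_select(conn, "alerts", ["ts", "source_ip", "priority"]))
    # ('SELECT "ts", "source_ip" FROM "alerts"', ['priority'])
    conn.execute("ALTER TABLE alerts ADD COLUMN priority INTEGER")
    print(build_select(conn, "alerts", ["ts", "source_ip", "priority"]))
    # ('SELECT "ts", "source_ip", "priority" FROM "alerts"', [])
```

Column names are only ever interpolated after being matched against the schema the database itself reports, which keeps the generated SQL tied to what actually exists.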

This is where the AI stops being a layer on top of Trisul and starts feeling like it’s wired into the system itself.

Making this work in practice meant dealing with tight performance envelopes, live telemetry, and constantly evolving schemas. Much of that groundwork was shaped by our developer Partha, whose deep dives into the constraints and trade-offs helped turn the design into something deployable. He has documented that layer in more detail in his article: Partha’s LinkedIn Article.

System Prompt Engineering: From Generic to Network-Savvy

Out of the box, an LLM doesn’t know what a FlowTracker GUID represents, which counters are meaningful, or why a timestamp mismatch can invalidate a query. Without that grounding, answers become vague, cautious, or overly verbose.

Trisul fixes this by anchoring the model in its domain.

System prompts provide Trisul-specific terminology, tool usage rules, and recovery behavior so the AI knows how to think inside the analytics engine, not just talk about it. Memory adds a final layer, adapting responses to how you usually investigate and how much detail you expect.

The result: answers grounded in Trisul’s semantics from the first response, not gradually corrected over the course of a conversation.
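As a rough illustration of how those layers could be combined, here is a Python sketch that assembles a system prompt from a terminology glossary, tool usage rules, recovery rules, and memory-derived preferences. All of the wording, the `build_system_prompt` function, and the preference keys are hypothetical; this is not Trisul’s actual system prompt.

```python
# Illustrative wording only; not Trisul's actual prompt text.
GLOSSARY = {
    "counter group": "a named collection of metrics keyed by an entity such as a host or application",
    "FlowTracker GUID": "an identifier for a flow tracker; copy it verbatim from tool output, never invent one",
}
TOOL_RULES = [
    "Resolve relative times such as 'after 10 PM' to absolute UTC timestamps before calling a tool.",
    "Request only the fields and time range the question needs.",
]
RECOVERY_RULES = [
    "If a tool reports a timestamp mismatch, re-query with a corrected window instead of guessing.",
    "If a GUID lookup fails, list the available IDs and ask which one was meant.",
]


def bullets(items: list[str]) -> str:
    return "\n".join(f"- {item}" for item in items)


def build_system_prompt(hints: dict[str, str]) -> str:
    """Assemble domain grounding plus per-user preferences into one system prompt."""
    sections = [
        "You are a network analytics assistant working inside Trisul.",
        "Terminology:\n" + bullets([f"{k}: {v}" for k, v in GLOSSARY.items()]),
        "Tool usage rules:\n" + bullets(TOOL_RULES),
        "Recovery behavior:\n" + bullets(RECOVERY_RULES),
        # Memory layer: preferences default to a brief, exploratory style.
        f"User preferences: detail={hints.get('detail', 'brief')}, "
        f"style={hints.get('style', 'explore')}",
    ]
    return "\n\n".join(sections)


if __name__ == "__main__":
    print(build_system_prompt({"detail": "deep"}))
```

Putting terminology and recovery rules in the system prompt, rather than correcting the model turn by turn, is what lets the first response already use the domain’s vocabulary.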

Conclusion

Everything you’ve seen so far lives in the CLI by design. It’s the fastest way to build, test, and refine how AI should interact with real network telemetry. But it’s not the final form.

The same intelligence is now moving beyond the terminal into a Web UI, making these capabilities accessible across more teams and workflows without losing depth.

This is still evolving, but the direction is set. And yes, there’s more coming!